Deploying a .NET application using Azure CLI sounds like an easy job. And it usually is. As with everything, sometimes problems occur, but with the help of Google (well, StackOverflow, mostly), you should be able to solve them. There was even a post on this very blog about troubleshooting issues after deployment to Azure App Service:

So why another post? One of the issues described in that post (specifically this one) has been haunting me for some time. The solution described there was to manually delete the offending DLLs from the App Service's filesystem and redo the deployment.

It would have been fine if it had to be done only once, but the issue came back several times and the manual work was becoming tedious. Besides, I wanted my deployments to work every time, not just most of the time.

The problem

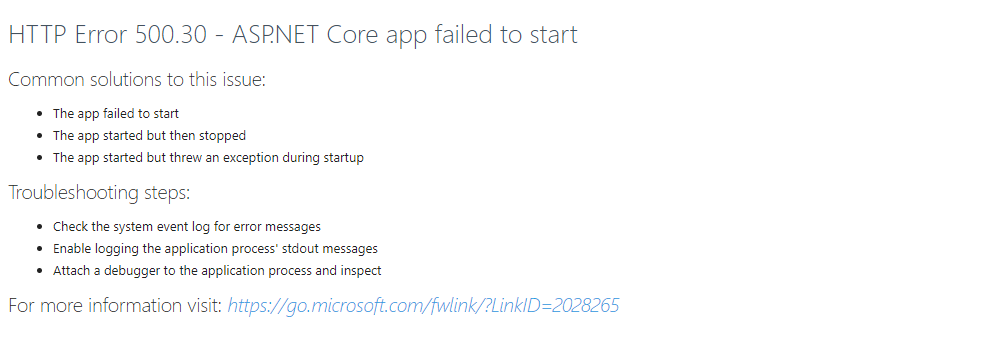

If you haven't read that article, here's a little summary of the problem at hand. After publishing the application with Azure CLI, the App Service is dead, and you're greeted with the following screen:

You check the App Service Event Log and you stumble upon this error:

<Event>

<System>

<Provider Name=".NET Runtime"/>

<EventID>1026</EventID>

<Level>1</Level>

<Task>0</Task>

<Keywords>Keywords</Keywords>

<TimeCreated SystemTime="2022-05-24T06:31:01Z"/>

<EventRecordID>213793516</EventRecordID>

<Channel>Application</Channel>

<Computer>DW0SDWK0002IE</Computer>

<Security/>

</System>

<EventData>

<Data>

Application: w3wp.exe

CoreCLR Version: 6.0.322.12309

.NET Version: 6.0.3

Description: The process was terminated due to an unhandled exception.

Exception Info: System.PlatformNotSupportedException: System.Data.SqlClient is not supported on this platform.

at System.Data.SqlClient.SqlConnection.Dispose(Boolean disposing)

at System.ComponentModel.Component.Finalize()

</Data>

</EventData>

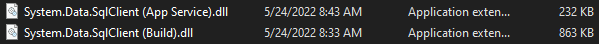

</Event>You compare the System.Data.SqlClient assembly present in the App Service filesystem against the one in your build artifact. They seem the same - they are created and modified on the same dates, target the same CPU bitness, and are the same version. But then, something catches your eye:

The size! These are not the same files at all!
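Checking file properties by hand works, but the same comparison can be scripted. The following Python sketch is a hypothetical helper (`compare_dirs` and `sha256_of` are illustrative names), assuming you have downloaded a copy of the webroot (for example through the Kudu portal) and have the build artifact locally. It reports files present in both trees whose size or SHA-256 hash differ, and deliberately ignores timestamps:

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file's contents."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def compare_dirs(artifact: Path, webroot: Path) -> list[str]:
    """List relative paths that exist in both trees but whose contents differ.

    Size is compared first (cheap), then the SHA-256 hash. Timestamps are
    ignored on purpose, because they can be identical for different files.
    """
    differing = []
    for src in sorted(p for p in artifact.rglob("*") if p.is_file()):
        rel = src.relative_to(artifact)
        dst = webroot / rel
        if not dst.is_file():
            continue  # only report files present on both sides
        if src.stat().st_size != dst.stat().st_size or sha256_of(src) != sha256_of(dst):
            differing.append(rel.as_posix())
    return differing
```

For example, `compare_dirs(Path("tmp/build-artifact"), Path("wwwroot-copy"))` (both paths are placeholders) would be expected to list System.Data.SqlClient.dll in the situation described above.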

Source of the problem

So why does this happen? How can a deployment leave an outdated file in place instead of replacing it with the clearly different one?

The answer is actually very simple. Deployments to App Service use Kudu, which relies on a tool called KuduSync.NET to copy the deployment files to your web application's webroot. By default, it only copies files whose modification date differs from that of the file already present in the target directory. No other file properties (such as size or content) are checked, so a file with the same modification date is not replaced, even if it differs from the one in the build artifact.
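To make the failure mode concrete, here's a simplified Python model of the timestamp-only copy decision described above. It is an approximation written for illustration, not KuduSync.NET's actual code; `should_copy` and its `full_compare` switch are hypothetical names:

```python
import filecmp
from pathlib import Path


def should_copy(src: Path, dst: Path, full_compare: bool = False) -> bool:
    """Simplified model of a sync tool's copy decision (illustrative only).

    By default only last-modified timestamps are compared, so a target file
    with the same timestamp but different bytes is left untouched. With
    full_compare=True, files whose timestamps match are also compared
    byte by byte.
    """
    if not dst.exists():
        return True  # nothing to compare against: always copy
    if src.stat().st_mtime_ns != dst.stat().st_mtime_ns:
        return True  # timestamps differ: copy
    if full_compare:
        # shallow=False forces a content comparison instead of trusting stat data
        return not filecmp.cmp(src, dst, shallow=False)
    return False  # same timestamp: assumed unchanged, even if the bytes differ
```

In the scenario above, `should_copy(artifact_dll, webroot_dll)` returns False because both files share a timestamp; only the content-aware variant notices that they differ.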

In most cases, that will not be a problem. But it was for me and might be for you. I'll show you three approaches to fixing it forever.

Fix #1 (easy, but slow)

Deep in Kudu's issue tracker and project wiki, there's a mention of a similar issue:

Zip deploy does not update node module files for NPM 5.6 and later

[...] This affects the default zipdeploy behavior where it is optimized to only copy files that are changed. To workaround the issue, do set appSettings SCM_ZIPDEPLOY_DONOT_PRESERVE_FILETIME=1. Zipdeploy files will always be updated and, as a result, overwriting the existing ones regardless of change.

This will indeed fix the problem, as all files will be replaced with the ones provided with the new deployment.

However, depending on the number of files in your artifact, the deployment may take much longer than before. Even the reporter of the issue resorted to using a different approach because of that:

I'm not sure if this was fixed, but last I tried using the flag to ignore the timestamps, deploying took an incredible amount of time.

You can find the implementation details here, and even in the code, there's a warning about this approach being non-optimised.
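If you manage App Service settings from a script, the setting from the quote above can be applied with the Azure CLI's `az webapp config appsettings set` command. The Python sketch below only assembles that command (the resource group and app names are placeholders) and runs it when `dry_run` is False, assuming `az` is installed and you are logged in:

```python
import shlex
import subprocess


def build_setting_command(resource_group: str, app_name: str) -> list[str]:
    """Assemble the Azure CLI call that sets SCM_ZIPDEPLOY_DONOT_PRESERVE_FILETIME=1."""
    return [
        "az", "webapp", "config", "appsettings", "set",
        "--resource-group", resource_group,
        "--name", app_name,
        "--settings", "SCM_ZIPDEPLOY_DONOT_PRESERVE_FILETIME=1",
    ]


def apply_setting(resource_group: str, app_name: str, dry_run: bool = True) -> str:
    """Return the command as a shell-style string; execute it only if dry_run is False."""
    command = build_setting_command(resource_group, app_name)
    if not dry_run:
        # Raises CalledProcessError if az exits with a non-zero code.
        subprocess.run(command, check=True)
    return shlex.join(command)
```

Keep the trade-off described above in mind: with this setting, every file is rewritten on each deployment.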

Fix #2 (fast, but complicated)

If you look closely at the Kudu deployment logs (available at https://<app-service-name>.scm.azurewebsites.net/api/deployments/<deployment-id>/log), you will probably notice these log entries:

{
  "log_time": "2022-09-13T15:55:03.4302161Z",
  "id": "d5245675-dff3-4cc0-8cd0-f32463ba690d",
  "message": "Generating deployment script.",
  "type": 0,
  "details_url": "https://<app-service-name>.scm.azurewebsites.net/api/deployments/<deployment-id>/log/d5245675-dff3-4cc0-8cd0-f32463ba690d"
},
{
  "log_time": "2022-09-13T15:55:03.6455988Z",
  "id": "ea13335b-9c48-4cf6-843e-356b896e5667",
  "message": "Running deployment command...",
  "type": 0,
  "details_url": "https://<app-service-name>.scm.azurewebsites.net/api/deployments/<deployment-id>/log/ea13335b-9c48-4cf6-843e-356b896e5667"
},

Kudu is a powerful tool: it generates a Windows batch (.cmd) script that performs the actual deployment. The default script basically boils down to this line:

call :ExecuteCmd "%KUDU_SYNC_CMD%" -v 50 !IGNORE_MANIFEST_PARAM! -f "%DEPLOYMENT_SOURCE%" -t "%DEPLOYMENT_TARGET%" -n "%NEXT_MANIFEST_PATH%" -p "%PREVIOUS_MANIFEST_PATH%" -i ".git;.hg;.deployment;deploy.cmd"

There are two important points that will help us fix our issue:

- The deployment script can be customised.

- KuduSync has more available parameters to be specified.

Let's start with customising the deployment script. To do that, you need to include a .deployment file at the root of your build artifact. If the file is present, Kudu will not generate the default script but will do what you instruct it to.

Among the additional KuduSync options, one is particularly interesting:

[Option("", "fullCompareFiles", Required = false,
    DefaultValue = "web.config",
    HelpText = "A semicolon separated list of file types to perform a full text comparison on instead of just a time stamp comparison. Wildcards are also accepted, example: --fullCompareFiles foo.txt;*.config;*.bar")]
public string FullTextCompareFilePatterns { get; set; }

By combining these two, the problem can be fixed. I would advise against writing the whole deployment script from scratch unless you really know what you are doing. In my opinion, it's best to start with the default one and adjust it to your needs.
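To get a feel for which files a pattern list such as foo.txt;*.config;*.bar would select, here's a rough Python approximation. It assumes that KuduSync's wildcard matching behaves like Python's `fnmatch` and is case-insensitive; neither assumption has been checked against the KuduSync source, and `matches_full_compare` is a hypothetical name:

```python
from fnmatch import fnmatch


def matches_full_compare(file_name: str, patterns: str = "web.config") -> bool:
    """Return True if file_name matches any entry of a semicolon-separated
    pattern list such as "foo.txt;*.config;*.bar".

    The default mirrors the option's DefaultValue. Both sides are lowercased
    so the result does not depend on the platform's case sensitivity.
    """
    return any(
        fnmatch(file_name.lower(), pattern.strip().lower())
        for pattern in patterns.split(";")
        if pattern.strip()  # skip empty entries, e.g. from a trailing ';'
    )
```

Since the option's default value is web.config, supplying your own list most likely replaces that default, so you may want to keep web.config in it, e.g. "web.config;System.Data.SqlClient.dll".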

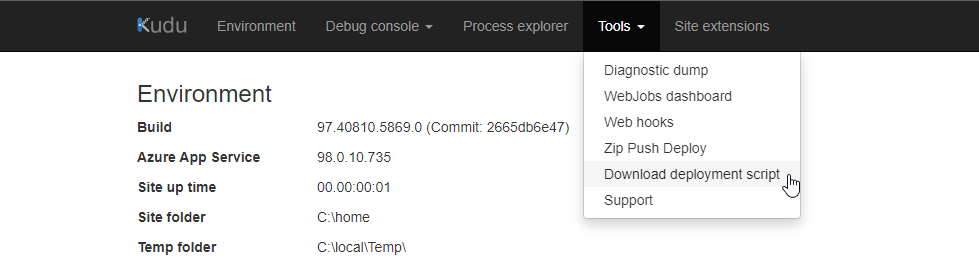

First, go to your App Service's Kudu portal located at https://<app-service-name>.scm.azurewebsites.net. Select Tools from the top menu and then Download deployment script:

This will give you an archive with two files:

.deployment:

[config]
command = deploy.cmd

deploy.cmd:

@if "%SCM_TRACE_LEVEL%" NEQ "4" @echo off

:: ----------------------
:: KUDU Deployment Script
:: Version: 1.0.17
:: ----------------------

:: Prerequisites
:: -------------

:: Verify node.js installed
where node 2>nul >nul
IF %ERRORLEVEL% NEQ 0 (
  echo Missing node.js executable, please install node.js, if already installed make sure it can be reached from current environment.
  goto error
)

:: Setup
:: -----

setlocal enabledelayedexpansion

SET ARTIFACTS=%~dp0%..\artifacts

IF NOT DEFINED DEPLOYMENT_SOURCE (
  SET DEPLOYMENT_SOURCE=%~dp0%.
)

IF NOT DEFINED DEPLOYMENT_TARGET (
  SET DEPLOYMENT_TARGET=%ARTIFACTS%\wwwroot
)

IF NOT DEFINED NEXT_MANIFEST_PATH (
  SET NEXT_MANIFEST_PATH=%ARTIFACTS%\manifest

  IF NOT DEFINED PREVIOUS_MANIFEST_PATH (
    SET PREVIOUS_MANIFEST_PATH=%ARTIFACTS%\manifest
  )
)

IF NOT DEFINED KUDU_SYNC_CMD (
  :: Install kudu sync
  echo Installing Kudu Sync
  call npm install kudusync -g --silent
  IF !ERRORLEVEL! NEQ 0 goto error

  :: Locally just running "kuduSync" would also work
  SET KUDU_SYNC_CMD=%appdata%\npm\kuduSync.cmd
)

::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::
:: Deployment
:: ----------

echo Handling Basic Web Site deployment.

:: 1. KuduSync
IF /I "%IN_PLACE_DEPLOYMENT%" NEQ "1" (
  IF /I "%IGNORE_MANIFEST%" EQU "1" (
    SET IGNORE_MANIFEST_PARAM=-x
  )
  call :ExecuteCmd "%KUDU_SYNC_CMD%" -v 50 !IGNORE_MANIFEST_PARAM! -f "%DEPLOYMENT_SOURCE%" -t "%DEPLOYMENT_TARGET%" -n "%NEXT_MANIFEST_PATH%" -p "%PREVIOUS_MANIFEST_PATH%" -i ".git;.hg;.deployment;deploy.cmd"
  IF !ERRORLEVEL! NEQ 0 goto error
)

::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::
goto end

:: Execute command routine that will echo out when error
:ExecuteCmd
setlocal
set _CMD_=%*
call %_CMD_%
if "%ERRORLEVEL%" NEQ "0" echo Failed exitCode=%ERRORLEVEL%, command=%_CMD_%
exit /b %ERRORLEVEL%

:error
endlocal
echo An error has occurred during web site deployment.
call :exitSetErrorLevel
call :exitFromFunction 2>nul

:exitSetErrorLevel
exit /b 1

:exitFromFunction
()

:end
endlocal
echo Finished successfully.

Modify the KuduSync call in the Deployment section (the line starting with call :ExecuteCmd "%KUDU_SYNC_CMD%") to include the --fullCompareFiles flag with the list of files that are causing you problems, e.g.:

call :ExecuteCmd "%KUDU_SYNC_CMD%" -v 50 !IGNORE_MANIFEST_PARAM! -f "%DEPLOYMENT_SOURCE%" -t "%DEPLOYMENT_TARGET%" -n "%NEXT_MANIFEST_PATH%" -p "%PREVIOUS_MANIFEST_PATH%" -i ".git;.hg;.deployment;deploy.cmd" --fullCompareFiles "System.Data.SqlClient.dll"

Then, make sure that both the .deployment and deploy.cmd files are published to the root of your dotnet publish output directory.
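One way to get both files into the publish output is a small post-publish step in your pipeline. The Python sketch below is hypothetical (`add_custom_deployment` is an illustrative name): it writes the .deployment content shown earlier and copies your customised deploy.cmd, wherever you keep it, into the root of the publish directory before the artifact is zipped:

```python
import shutil
from pathlib import Path

# Same content as the .deployment file from the downloaded archive.
DEPLOYMENT_FILE = "[config]\ncommand = deploy.cmd\n"


def add_custom_deployment(publish_dir: Path, deploy_cmd: Path) -> None:
    """Place .deployment and the customised deploy.cmd in the publish root."""
    publish_dir.mkdir(parents=True, exist_ok=True)
    (publish_dir / ".deployment").write_text(DEPLOYMENT_FILE, encoding="utf-8")
    # copy2 copies the bytes as-is (CRLF line endings survive) plus metadata.
    shutil.copy2(deploy_cmd, publish_dir / "deploy.cmd")
```

For example, `add_custom_deployment(Path("tmp/build-artifact"), Path("deployment/deploy.cmd"))`, where both paths are placeholders for your own layout.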

Doing all this will fix the problem. The not-so-great thing about this solution is that you have to manually specify the offending DLLs in the deployment script.

For more information about customising the deployment script, make sure to visit Project Kudu's wiki:

Fix #3

It took me the longest to get here, but it finally gave me the satisfaction of fully understanding the problem. The fixes mentioned above worked, but they were either slow or required maintenance. What brought me here was this question:

Why is there a different System.Data.SqlClient assembly in the App Service's webroot, and why is it so much smaller than the one from the build artifact?

After some time, I noticed a pattern in the App Service startup issues. I was not the only person working on this project. The other developer uses Visual Studio and sometimes used its Publish option to publish the project directly to Azure. When they did that, the application started and ran just fine. But if I then deployed using Azure CLI, the error would return.

That got me thinking - how is the Visual Studio publish method different from the Azure CLI one? Does it use a custom deployment script? Does it remove all existing files from the webroot prior to copying the new files?

Surprisingly, no. It uses a different dotnet publish approach, which results in a slightly different build artifact.

If you head over to Microsoft's documentation about dotnet publish:

you will notice that there are two publishing methods available. Your .NET project can be deployed either as a framework-dependent deployment (FDD) or a framework-dependent executable (FDE). I was using the .NET 5 and 6 SDKs to publish the project, which meant that I was publishing it as a framework-dependent executable (because that's the default since .NET Core 3.0).

There's also documentation regarding publishing .NET apps with Visual Studio at:

There you can see that Visual Studio publishes .NET projects as framework-dependent deployments.

But why does it matter? System.Data.SqlClient (and Microsoft.Data.SqlClient too, by the way) is a library that depends on native assemblies targeting different platforms to do its work.

In FDD publishing mode, the native assemblies were put in a runtimes folder in the output directory and the System.Data.SqlClient would choose the appropriate one for the current OS. If no applicable native assembly was found, it would throw the System.PlatformNotSupportedException and prevent the application from starting.

In FDE publishing mode, however, the assets for the targeted platform (specified with the --runtime switch) were placed directly in the output root: the System.Data.SqlClient.dll in the artifact was already the platform-specific build, and no runtimes folder was produced. During the deployment, KuduSync removed the runtimes directory from the webroot (because it wasn't present in the build artifact) but did not replace the System.Data.SqlClient.dll file, since its modification date was unchanged.

So in the end, the webroot contained the System.Data.SqlClient.dll from the FDD deployment, which relied on the assemblies from the runtimes directory. Those were no longer there, because the FDE deployment doesn't output them that way.
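The sequence above can be replayed with a toy model. This Python sketch is a simplification, assuming the timestamp-only comparison and the manifest-based deletion described in this post; files are reduced to a timestamp and a label, and the runtimes path is shortened for readability:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileInfo:
    mtime: int     # last-modified timestamp (arbitrary units)
    content: str   # stands in for the file's bytes


def sync(source: dict[str, FileInfo], target: dict[str, FileInfo],
         previous_manifest: set[str]) -> dict[str, FileInfo]:
    """Toy model of a manifest-based, timestamp-only sync (illustrative only)."""
    result = dict(target)
    # Copy files that are missing from the target or whose timestamp differs.
    for path, info in source.items():
        existing = result.get(path)
        if existing is None or existing.mtime != info.mtime:
            result[path] = info
    # Delete files deployed last time that the new source no longer contains.
    for path in previous_manifest - set(source):
        result.pop(path, None)
    return result


# Webroot after a Visual Studio (FDD) publish; the runtimes path is simplified.
webroot = {
    "System.Data.SqlClient.dll": FileInfo(100, "portable build"),
    "runtimes/win/System.Data.SqlClient.dll": FileInfo(100, "platform-specific build"),
}
# Artifact from the CLI (FDE) publish: same timestamp, no runtimes folder.
artifact = {
    "System.Data.SqlClient.dll": FileInfo(100, "platform-specific build"),
}

after_cli_deploy = sync(artifact, webroot, previous_manifest=set(webroot))
print(after_cli_deploy)
# {'System.Data.SqlClient.dll': FileInfo(mtime=100, content='portable build')}
```

The portable DLL survives while the folder it depended on is deleted, which is exactly the broken state from the event log.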

After understanding the issue, the fix was simple. I changed my dotnet publish usage from:

dotnet publish server/MyApp/MyApp.csproj -c Release --no-restore --runtime win-x86 --no-self-contained -o ./tmp/build-artifact

to:

dotnet publish server/MyApp/MyApp.csproj -c Release --no-restore -p:UseAppHost=false -o ./tmp/build-artifact

And it has not failed since. Now the application can be published interchangeably from the CLI and Visual Studio.
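To catch an accidental switch back to the old command, a CI step could inspect the publish output before deploying. The Python sketch below is a heuristic based on the layout difference described above (a runtimes folder next to System.Data.SqlClient.dll in FDD output). The function name and the idea of failing the build on it are assumptions, not part of the original setup:

```python
from pathlib import Path


def looks_like_fdd_output(publish_dir: Path,
                          library: str = "System.Data.SqlClient.dll") -> bool:
    """Heuristic (an assumption, not an official rule): in the FDD output
    described above, the library ships with a runtimes folder next to it,
    while the RID-specific FDE output has no such folder."""
    has_library = (publish_dir / library).is_file()
    has_runtimes = (publish_dir / "runtimes").is_dir()
    return has_library and has_runtimes
```

For example, a pipeline could stop when `looks_like_fdd_output(Path("tmp/build-artifact"))` is False.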

Apart from the proposed solutions, there is a lesson here. It's better to understand a problem first and then choose an appropriate solution than to go straight to applying workarounds.

Cover photo by Mariana B. on Unsplash